Watch a child playing alone with dolls or action figures, and in almost all cases you’ll hear a very lively discussion, with many different voices used and personas assumed. Are we hardwired to talk to non-sentient objects? Can it be as satisfying to interact with a chatbot as with a human? If all you want is a fast, accurate answer, of course it can.

A HubSpot survey tells us that as long as they can get help quickly and easily, 40% of consumers don’t care whether a chatbot or a person answers their customer service questions. Gartner predicts that by 2020, the average person will have more conversations with bots than with their spouse (I think this stat reveals more about the state of marriages than about the use of augmented intelligence). Is this the end of the world as we know it (cue Michael Stipe), or a golden age of convenience?

What, if anything, are we giving up when we interact with AI rather than a person? Or is that even the right question to be asking?

A long time ago in a galaxy far, far away. Actually, it only feels that way. In truth, it was at UC Irvine just before the dawn of personal computers. The computer science department needed an English graduate student to grade papers for a class called “Ethics in Computer Science.” There was no coding and there were no labs; the required reading was Joseph Weizenbaum’s Computer Power and Human Reason, and I was the grad student.

An MIT computer scientist and one of the early figures in artificial intelligence, Weizenbaum had created ELIZA, a natural language processing program that mimicked Rogerian therapy. He named it for Eliza Doolittle, the flower seller in Pygmalion (perhaps better known as My Fair Lady). Reduced to its basics, a Rogerian therapist lets patients arrive at their own conclusions by restating what they say (“How are you feeling today?” “I’m a little blue.” “So, you’re unhappy?”). In his book, Weizenbaum recounts the time his secretary at MIT asked him to leave the room while she “spoke” with ELIZA. Even with full knowledge that ELIZA was a program, Weizenbaum’s secretary was having a personal and powerful interaction. And she was simply typing! Now that we talk to machines out loud, the connection is much more intimate.

The CS students were to write papers on the difference between decision-making as a purely computational exercise and the uniquely human qualities that lead to choice (whether these are truly unique is the subject of a much larger discussion). I dutifully graded their papers, marked one a “C” that probably deserved a “D,” and received an office-hours visit from that student. He wanted a better grade. I went over all the flaws in logic, all the grammatical mistakes, and what he could do to rewrite it for a better grade.

“English doesn’t matter,” he said. “Coding will rule the world.”

“People will always speak to each other,” I said.

I think we were both right.

What we choose to hand over to machines

I do not know my own son’s phone number because I have never dialed it. It has been programmed into my phone forever. While likely typical today, this still strikes me as strange. But handing over certain brain functions (mostly data storage) has been going on for a long time.

A few thousand years ago, Homer (if there was a Homer) was not read; he was heard. Rhapsodes (yes, it’s the root of the word rhapsody) roamed the countryside and recited, or more accurately sang, the Iliad and the Odyssey from memory, as well as other histories of the Trojan War that are lost to us. Let me repeat that: from memory. These rhapsodes were not freaks of nature. Indeed, cultures without a written language that have been studied in more modern times also pass down long and complex oral histories by recitation.

Do we, in a sense, voluntarily relinquish certain brain functions to technologies: tablets, scrolls, books, recordings, integrated circuits? And can the adoption of these technologies lead to a re-wiring of our brains? Does this freedom from mundane tasks allow us to be more creative? Is brainpower a zero-sum game? A Forbes article titled AI By The Numbers cites this stat from PwC: 34% of business executives say that the time freed up from using digital assistants allows them to focus on deep thinking and creating.

From the same Forbes piece, we get this stat from Business Insider: 48% of consumers worldwide said they preferred a chatbot that solved their issue over a chatbot that had a personality.

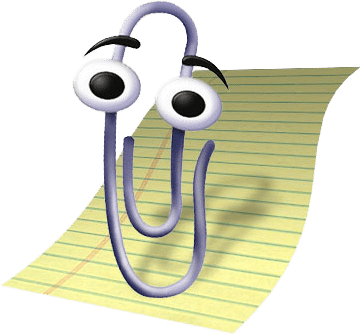

Remember Clippy?

No one needs to design personality into our assistants; the user naturally supplies it. For years, we’ve called our cars “he” or “she.” Anthropomorphism is common and is even cited as a sign of social intelligence.

Of course, with any sea change there is dislocation. Again, from Business Insider:

“Twenty-nine percent of customer service positions in the US could be automated through chatbots and other tech, according to Public Tableau. We estimate this translates to $23 billion in savings from annual salaries, which does not even factor in additional workforce costs like health insurance.”

When I see the words “savings from annual salaries,” I also see men and women at the unemployment office. Yes, in the long run, freedom from menial work means opportunity for more rewarding work and greater responsibility. And as we go from “augmented intelligence” to “autonomous intelligence,” the tasks that can be given to machines continue to grow. Now that programs can “see,” what other jobs will be ceded to computer vision? London’s Metropolitan Police set up a specialized unit called the “Super-Recognizers” just two years ago, composed of people with an uncanny ability to recognize faces. I think a face-off between them and Google’s AutoML AI system would be a better show than Ken Jennings versus IBM’s Watson on Jeopardy was.

All of which brings me back to ethics. As we rush to commercialize advances in AI, I was surprised to learn there is an organization called the Partnership on Artificial Intelligence to Benefit People and Society, Partnership on AI for short. Its founding partners are Amazon, Apple, Google, DeepMind, Facebook, IBM and Microsoft. Other partners include the ACLU, Human Rights Watch, Amnesty International, McKinsey, and Accenture. Interesting bedfellows. The partnership was “established to study and formulate best practices on AI technologies, to advance the public’s understanding of AI, and to serve as an open platform for discussion and engagement about AI and its influences on people and society.” I know I’m now sleeping better. Alexa, wake me when it’s over.